The Third Angle

Best Business Podcast (Gold), British Podcast Awards 2023

How do you build a fully electric motorcycle with no compromises on performance? How can we truly experience what the virtual world feels like? What does it take to design the first commercially available flying car? And how do you build a lightsaber? These are some of the questions this podcast answers as we share the moments where digital transforms physical, and meet the brilliant minds behind some of the most innovative products around the world - each powered by PTC technology.


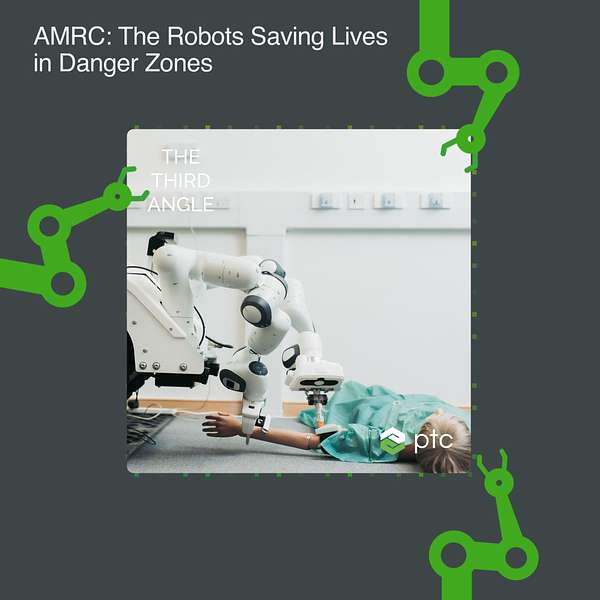

AMRC: The Robots Saving Lives in Danger Zones


“Ultimately, we will be looking to save lives, I think, within one to two years that that would be a reality.”

If you are injured in a disaster zone, it is critical that you receive medical care as urgently as possible. But what if the environment is not safe for medics to enter? Either because it is a war zone or because there are hazardous materials around? That’s where AMRC’s VR technology can help.

The Advanced Manufacturing Research Centre (AMRC) at the University of Sheffield, UK has created pioneering robotics technology to get medics into difficult-to-access areas to triage patients. Using medical telexistence (MediTel) technology, medics can operate a remote-controlled robot to reach the patients, and wear a virtual reality headset which places them in that environment. The robots are equipped with medical devices which allow the medics to carry out checks and procedures, including taking the patient's pulse, administering pain relief and palpating their abdomen. Meanwhile, the headset is so realistic that it feels like the user is there on the ground, with the view from the robot moving in real time as they move their head. AMRC is hoping to use this technology in the next 1-2 years to save lives in dangerous environments.

In this episode we head to Sheffield to visit AMRC’s Digital Design Lab to meet David King, who demonstrates how realistic their MediTel VR technology is.

Find out more about AMRC here

Your host is Paul Haimes from industrial software company PTC.

Episodes are released bi-weekly. Follow us on LinkedIn and Twitter for updates.

Third Angle is an 18Sixty production for PTC. Executive producer is Jacqui Cook. Sound design and editing by Ollie Guillou. Location recording by Helen Lennard. And music by Rowan Bishop.

Welcome to Third Angle, where mini remote-controlled tanks deliver emergency medical care. I’m your host, Paul Haimes from industrial software company PTC. In this podcast we share the moments where digital transforms physical, and meet the brilliant minds behind some of the most innovative products around the world, each powered by PTC technology.

Whether caught up in the midst of a natural disaster or trapped in a hazardous location, some medical emergencies are a danger not only to the patient but to the medics who are called upon to save them.

So what if you could send a robot medic in to scout the dangers before a human even steps on the scene? One that can deliver first-line medical care, check vitals, and even deliver medicine? That would be game-changing.

Well, let me introduce you to Meditel, a first-of-its-kind product with the potential to save lives, developed by the University of Sheffield Advanced Manufacturing Research Centre (AMRC).

We sent our producer Alisdair to meet David King, head of digital design at the AMRC, where he test-drove Meditel in a sensory VR simulation.

So you’ve got essentially two VR controllers left and right. And the controllers are essentially like a tank controller. Then you’ve got the VR headset. So that’s giving you the view, essentially from the 360 camera, and you’re driving around the forest.

I can actually hear the grass under my wheels.

So this is the Digital Design Lab. So this is where we effectively built and prototyped Meditel. So we look at a whole range of different technologies in here, everything from electronics to software design to robotics, and do a lot of work in immersive technology. So looking at how we can use augmented reality, virtual reality, to improve design processes and improve interfaces to systems. This is where it was built, where we put it together. The project was an extremely challenging project, it was nine months from start to end.

We applied for and won a defence competition. The Defence and Security Accelerator (DASA) was a project that was sponsored by the Defence Science and Technology Laboratory (Dstl) and the Nuclear Decommissioning Authority (NDA). They wanted people to prototype technologies for telexistence. So telepresence is another way of describing it. So it’s essentially where you have a remote operator, in this case with a VR headset, controlling a robotic autonomous system, and to give that immersive presence to the remote operator. So they essentially feel as if they are at that location. So we developed all aspects of it, the operator interface, the robotics system, and the use case we were looking at was around trying to triage casualties in a hostile environment. It could be chemical, it could be biological, but essentially where you’ve got situations where you have casualties, and it’s dangerous to send in medics. Things like earthquakes, potentially, so where you’ve got humanitarian disasters, it could be something like a terrorist incident where there could potentially be explosives still in the environment. And in those situations, they wanted to be able to send in systems like this robotic system where they could go in and start to perform a basic triage on casualties while making sure that the medics are in a safe zone away from the dangerous area. And that was the brief.

We were given certain triage tasks we had to complete, so we had to take the temperature, we had to be able to remotely communicate with the patient, we had to apply a painkiller, take a pulse, and also do a palpation. So essentially, remotely feel the abdomen of the casualty for any objects that might be there. We put all this together with a number of different sensors and camera systems, and ultimately, at the end of the project, we demonstrated this to various stakeholders in defence from the MoD to Dstl, to a whole range of people, and it’s been really well received.

The AMRC as a whole was formed just over 20 years ago and it was really very much focused on improving manufacturing techniques for aerospace, so Boeing were one of the early partners. It’s progressed significantly from looking at the whole manufacturing process; over a number of years now very much focused on digital technologies associated with manufacturing. So Industry 4.0 is a bit of a buzzword that’s thrown around quite a lot, and it’s really all the technologies associated with that. So it’s robotics and future manufacturing techniques. So we would work with partners to prototype and develop those technologies.

We work on everything from aerospace to medical projects to space projects and defence projects. We’ve got an example here of a CubeSat launch, a prototype. So we have various kinds of medical tools and standard medical devices that the robot can automatically pick up. In this case, we’ve got a standard auto-injector, an off-the-shelf infrared thermometer, and we have a blood pressure cuff as well, which we can put on there. But there are actually some tools that we had to develop ourselves. So in terms of remotely performing an examination of a casualty's abdomen, that’s not an off-the-shelf piece of kit. So we actually developed something called a tac tip. And it was a tactile sensor that would feed information back through to the operator. So they would be able to identify if there were any unknown objects, or unexpected objects, in the casualty's abdomen such as shrapnel. Yes, yes. So as you can see, there’s a soft rubber tip, and as that deforms over the surface, you can start to build up a remote impression of the surface itself. And that was a feedback mechanism that allowed us to identify any obstructions or unexpected objects on the casualty.

All the communication is via a very, very high bandwidth millimetre wave communication. So it’s similar technologies to 5G. So everything is being passed through that link. That’s the control system for the robot itself, we’ve got a number of different camera systems on there. Sat on the top of the UGV itself, we’ve got a 360 camera. So we stream that through into the virtual reality headset. So effectively, it feels, for all intents and purposes, as if the operator was effectively sat on top of the UGV itself. They can move their head around and their view moves around with them.

I imagined it flying, like a drone.

Yes, it’s quite a heavy thing. It’s probably around 400kg. So we partnered with a company that provided a tracked platform. So that was our base for the unit.

It’s a bit like a mini tank.

Essentially, yes. It’s a very, very rugged platform. The tracked vehicle was essentially developed for agriculture purposes. It was, if you like, like a remote control tractor vehicle, and we’ve had to build all the intelligence and the sensors and everything on top of that. But we started with something which was really fit for the environment. This thing is designed for going around farms and pretty rugged terrain.

We have won further funding at the minute that’s quite focused on the operator interface side. So we’re actually looking at new sensor technologies to monitor the user in terms of how fatigued they are getting. One thing to consider with these things is, people wear VR headsets, but they can be quite short-term – they can be 30 minutes, an hour. Some of these missions could be multiple hours, so 6, 7, 8 hours. And there’s work there to understand how we do that. How do we enable operators to work for extended periods within these kinds of immersive environments? People still experience motion sickness and things like that. It’s also the environment that these things will be working in. It is designed for emergency situations so they can be quite intense, stressful environments. You’ve got potentially multiple casualties that you’re trying to go out, triage and assess. And doing that over a long period can be quite intense.

The brief we were given was that it would actually be a remote medic. So they will be medically trained. In terms of what it could be, it could actually be multiple operators, it could be an operator that gets the robot platform to where it needs to be, but then they hand over to a medic to actually perform a triage. So then you’re utilising the medic for what they’re good at – medical procedures. The NHS has specific teams for large-scale, mass-casualty incidents. So, they have these specific teams for that, and they would be an obvious user for the system. So if a large incident was declared, they would contact this team, a bit like the SEAL team, that kind of thing. So they would arrive on the scene and assess, and they have specialist equipment to deal with these situations. This MediTel would be a potential piece of their equipment, one of their tools, if you like, that they could deploy in these situations.

These systems would typically be part of a larger system. In terms of practically implementing it, we suspect you would have a companion drone system. So this would arrive at a scene in a medical emergency of some description, the drone would go out and survey the area, identify casualties, and while the drone is doing that, the system is starting to travel to the casualties and then start to triage. That is the first step of what we think is a much larger system.

So we have been told by the MoD that this was the first time that they’d seen a system like this as a fully integrated system. They’ve seen elements of this, they’ve seen standalone robotic systems and mobile platforms. But this was the first time really that they’d seen everything brought together into a platform that was effectively suitable for the environment within which it was being asked to work. And so we were hopeful that within the next one to two years we could potentially deploy this system. Ultimately, we will be looking to save lives. That’s what we want to do with this system, what it’s capable of. And it’s realistic, I think, within one to two years that that would be a reality.

Time to meet our expert, Brian Thompson, who heads up PTC’s CAD division.

Brian, here we have a great example of how the AMRC, a world leader in manufacturing excellence, has used the power of Creo to bring lifesaving ‘Robo Doctor’ technology closer to market. They used Creo’s integrated simulation capabilities to validate the design to ensure the product was ready for manufacturing, all within a matter of weeks. Brian, we’ve spoken about Creo on previous episodes, but can you give us a summary of the importance of validation during the design process?

Yeah, sure. I could definitely see how this is valuable for the development of something that’s so cutting edge, like a next-generation 3D printer. Time to market and really, really precise engineering are going to be really important for something this complex, with so many different engineering factors.

You know, when you’re designing something like a piece of manufacturing equipment that is melting metal together to make parts, there are all sorts of requirements, from mechanical requirements to thermal requirements, and the simulations can get pretty complex. So teams like this are going to have simulation experts that are going to be focused on some really, really high-level simulations, but there’s also going to be a pretty wide array of what we will call in-process simulations that need to occur as the design is being developed.

And what we want is for design engineers to be able to accomplish those simulation tasks as part of their design process, so that the simulation experts at VulcanForms can focus on the more complex multiphysics, even highly nonlinear, problems that are going to be relevant in developing a piece of additive manufacturing equipment like VulcanForms has developed here.

So Creo Simulation Live is really, really well suited to the task of providing in-process design guidance. It’s actually quite robust simulation, but more focused on individual physics, like structural simulation or thermal simulation or modal simulation, that is a lot more intuitive and relevant for the design engineer.

And so Creo Simulation Live gives the design engineer the type of tool that they can use right there as part of the design process. It’s practically instantaneous because it works on the graphics card and uses a brand new technology developed by our partners at Ansys. And it is really, really well suited to in-process design simulations that design engineers can accomplish without putting an overload on the simulation team at a company like VulcanForms.

So we’re excited to see VulcanForms take advantage of Creo Simulation Live in this way. It’s exactly what we intended for Creo Simulation Live. And frankly, it’s exactly why this real-time simulation technology inside Creo has been a strong, strong driver for a lot of interest from our customer base over the last several years.

Thanks to Brian and to David for giving us a glimpse into Meditel's incredible, life-saving capabilities.

Please rate, review and subscribe to our bi-weekly Third Angle episodes wherever you listen to your podcasts and follow PTC on LinkedIn and Twitter for future episodes.

This is an 18Sixty production for PTC. Executive producer is Jacqui Cook. Sound design and editing by Ollie Guillou. Recording by Alisdair McGregor. And music by Rowan Bishop.